Facebook and Instagram ban 'white nationalism and separatism'

Facebook has been pilloried for the livestream of the New Zealand mosque attack.

Facebook and Instagram are expanding their ban on white supremacy on their platforms, almost two weeks after a shooter associated with such ideology committed the worst terror attack in New Zealand history and livestreamed it on Facebook.

"We're announcing a ban on praise, support and representation of white nationalism and separatism on Facebook and Instagram, which we'll start enforcing next week. It's clear that these concepts are deeply linked to organized hate groups and have no place on our services," the company announced in a post on its newsroom site on Wednesday.

The livestream of the attack on two New Zealand mosques, which killed 50 people and injured dozens more, drew worldwide attention both because the event could be broadcast at all and because of how long the footage stayed up on social media platforms, including YouTube and Twitter.

The company said it had always cracked down on white supremacy, but admitted it did not use the same tools to combat similarly couched extremist content.

"We didn’t originally apply the same rationale to expressions of white nationalism and separatism because we were thinking about broader concepts of nationalism and separatism -- things like American pride and Basque separatism, which are an important part of people’s identity," the Facebook post said.

"But over the past three months our conversations with members of civil society and academics who are experts in race relations around the world have confirmed that white nationalism and separatism cannot be meaningfully separated from white supremacy and organized hate groups," the statement said. "Going forward, while people will still be able to demonstrate pride in their ethnic heritage, we will not tolerate praise or support for white nationalism and separatism."

The announcement struck some experts as overdue.

"The very idea that there is a significant difference between white separatism, white nationalism, and white supremacy is a rhetorical game played by white supremacist groups, who are looking to shape the public opinion on their movement. Facebook is finally seeing what researchers and anti-racist activists have known for decades, that there is no possibility of white nationalism that does not rely on genocidal ideation," Joan Donovan, director of the Technology and Social Change Research Project at Harvard Kennedy’s Shorenstein Center, told ABC News.

Last week, the company said its artificial intelligence technology failed to remove the broadcast of the attack from its platform. According to Facebook, the video was viewed almost 200 times while it was live and an additional 4,000 times before being taken down.

"We also need to get better and faster at finding and removing hate from our platforms. Over the past few years we have improved our ability to use machine learning and artificial intelligence to find material from terrorist groups. Last fall, we started using similar tools to extend our efforts to a range of hate groups globally, including white supremacists," Facebook's Wednesday post said.

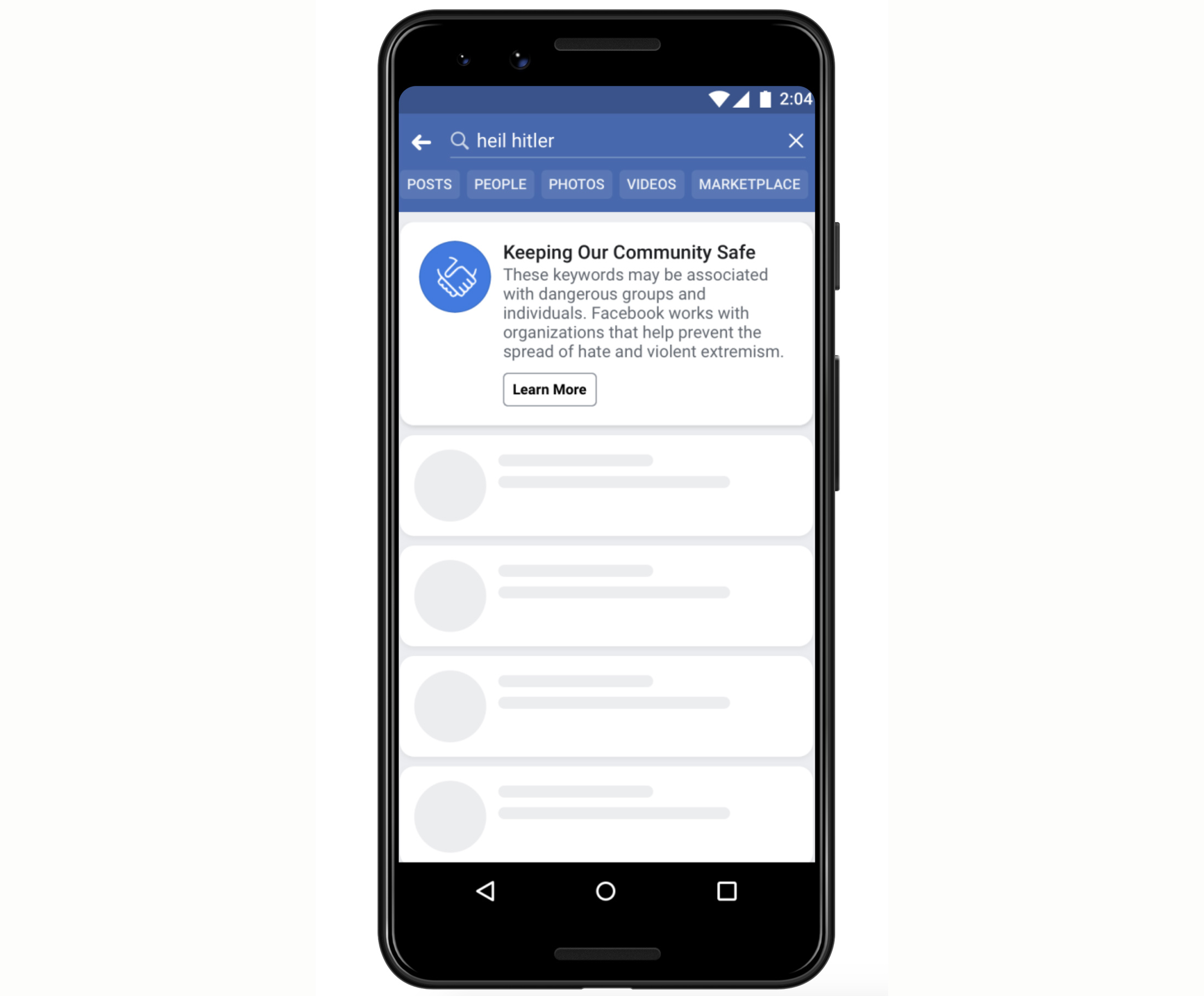

Facebook also said it would attempt to redirect and connect "people who search for terms associated with white supremacy to resources focused on helping people leave behind hate groups," including Life After Hate, "an organization founded by former violent extremists that provides crisis intervention, education, support groups and outreach."