Why 538's forecast hasn't moved much post-debate

Polls move a lot in the fall, and the fundamentals are still good for Biden.

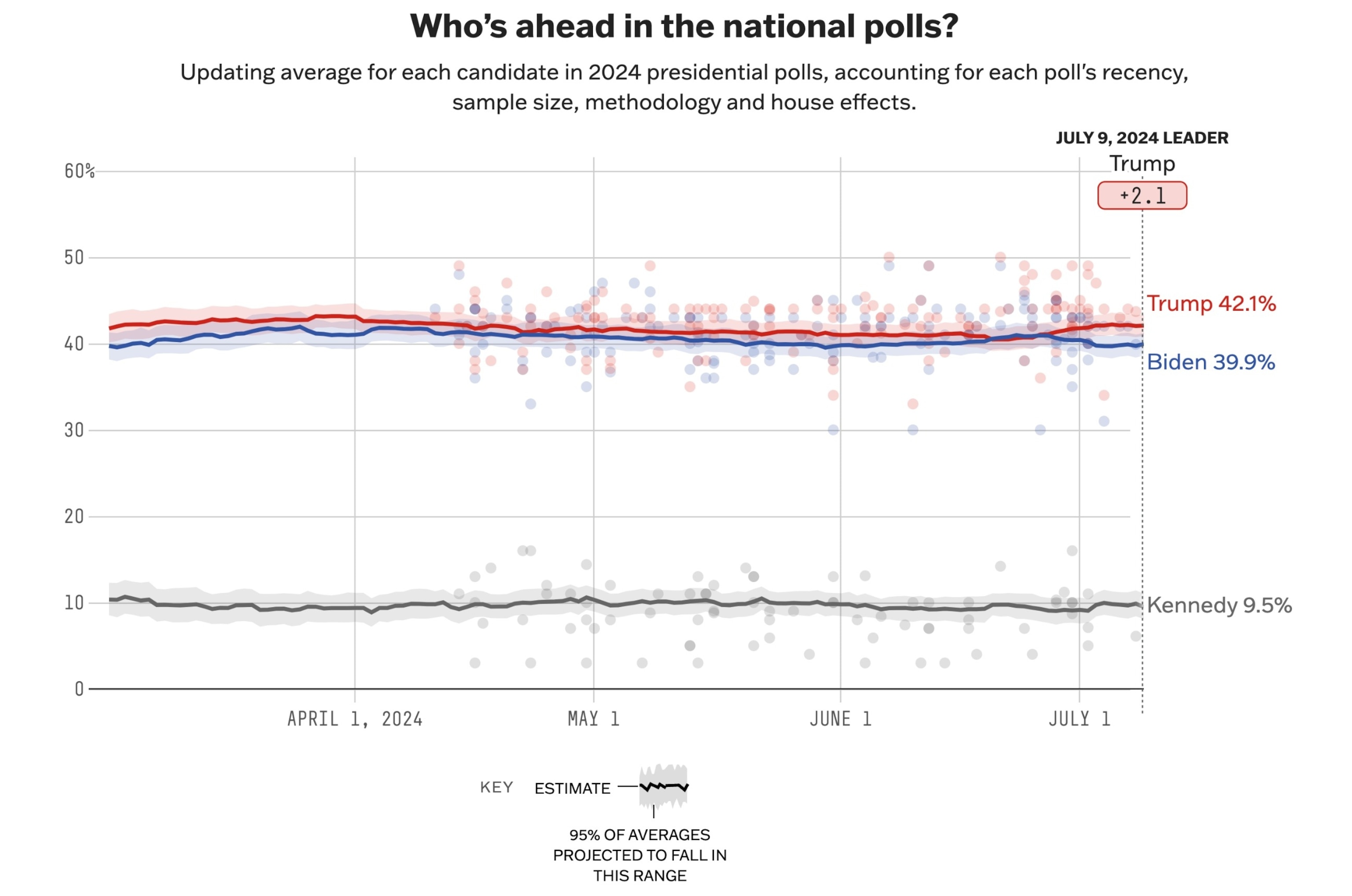

According to 538's average, former President Donald Trump's margin in national polls has grown by just about 2 percentage points since June 27, when President Joe Biden delivered a poor performance in the cycle's first presidential debate. Biden's current 2.1-point deficit is the worst position for a Democratic presidential candidate since early 2000, when then-Vice President Al Gore was badly lagging Texas Gov. George W. Bush, according to our retroactive polling average of that race.

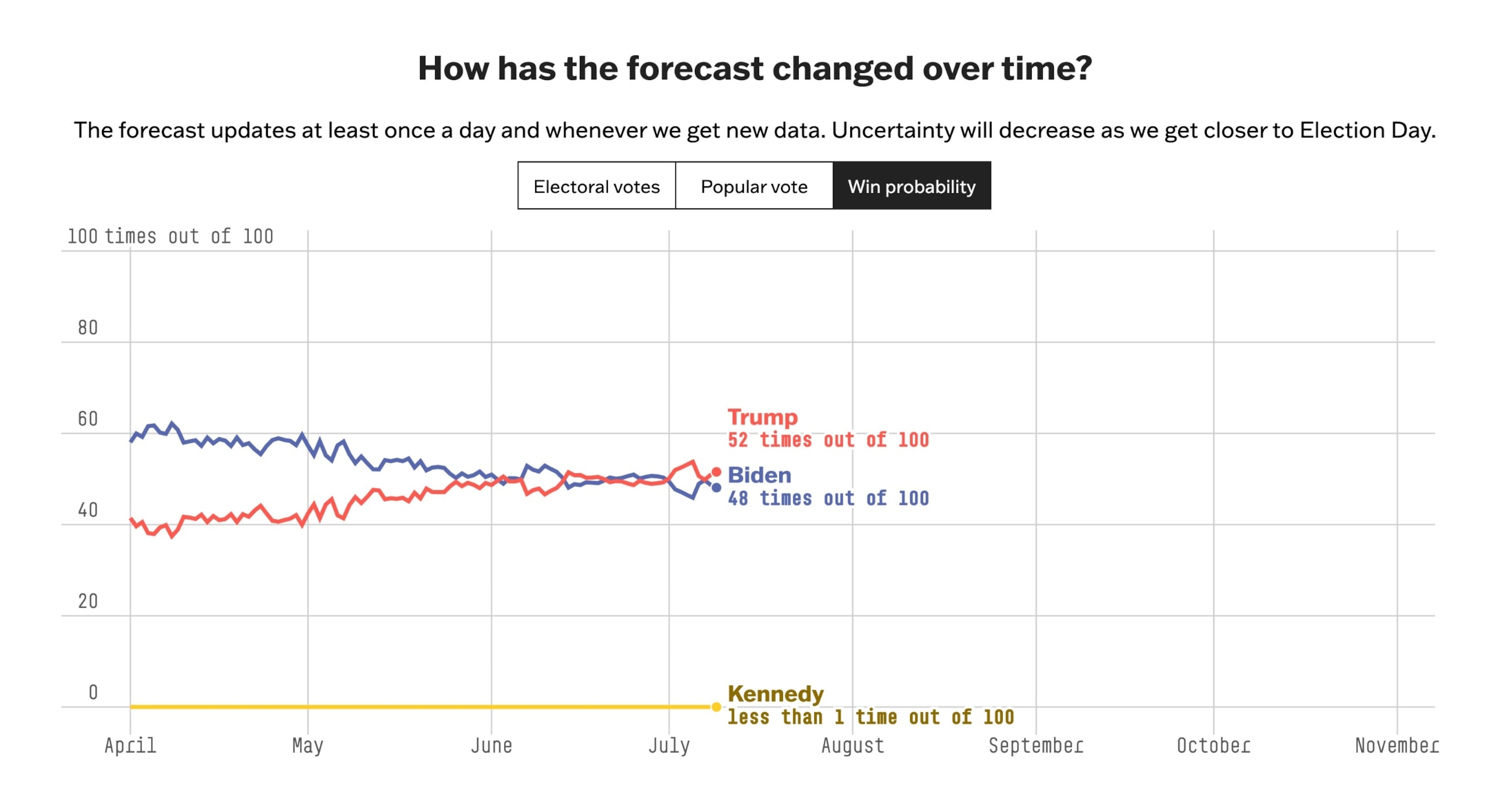

Yet our forecast for the 2024 election has moved very little. On debate day, we gave Biden a 50-in-100 chance of winning a majority of Electoral College votes. That chance briefly dipped as low as 46-in-100 on July 5, but it now stands at 48-in-100. At first glance, this lack of movement is confounding: If Biden has limped from roughly tied to down more than 2 points in national polls, shouldn't his chances of winning have sunk by more than a few points?

Well, not necessarily. The "right" amount of movement in a forecast depends on how much uncertainty you expect there to be between now and the event you're trying to predict. It's probably easy for you to predict how hungry you'll be tomorrow morning, for example — a pretty low-variance prediction (unless maybe you happen to be traveling internationally or running a marathon). But some things, like medium-range stock returns, are much harder to predict.

Predicting what public opinion will be four months from now is also difficult. On one hand, that's because small events — like debates — can cause large changes in the polls. But it's also difficult because the polls themselves are noisy. There are many sources of uncertainty that all have to be combined properly, and forecasters make many informed but imperfect choices in figuring out how to do that. (This is true whether you have a mental model or a statistical model, like we do at 538.)

To make things easier to digest, let's think about our main sources of uncertainty about the election in two parts.

First, there is uncertainty about how accurate polls will be on Election Day. This is somewhat easy to measure: We run a model to calculate how much polling error there has been in recent years and how correlated it was from state to state. This model tells us that if polls overestimate Democrats by 1 point in, say, Wisconsin, they are likely to overestimate Democrats by about 0.8 points in the average state — and by closer to 0.9 points in a state like Michigan that's demographically and politically similar to America's Dairyland. On average, we simulate about 3.5-4 points of poll bias in each of our election model's scenarios — meaning that, about 70 percent of the time, we expect polls will overestimate either Democrats or Republicans by less than or equal to 3.5 points and, 95 percent of the time, we expect that bias to be less than 8 points.
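To make the state-to-state relationship concrete, here is a minimal sketch rather than 538's actual model. It assumes poll bias in two states is jointly normal with mean zero and the same standard deviation, in which case the expected bias in the second state is simply the correlation times the bias observed in the first. The function names, the 4-point standard deviation and the use of 0.8 and 0.9 as correlations are illustrative assumptions based on the figures quoted above.

```python
import math
import random


def expected_bias_elsewhere(observed_bias, correlation):
    """Expected poll bias in another state, given the bias seen in one state.

    Assumes both biases are jointly normal, mean zero, equal variance, so the
    conditional mean is correlation * observed_bias.
    """
    return correlation * observed_bias


def simulate_pair(correlation, sd, rng):
    """Draw one correlated pair of state-level poll biases (in points).

    The second draw mixes the first draw with independent noise so that both
    have standard deviation `sd` and the requested correlation.
    """
    first = rng.gauss(0.0, sd)
    noise = rng.gauss(0.0, sd)
    second = correlation * first + math.sqrt(1.0 - correlation ** 2) * noise
    return first, second


if __name__ == "__main__":
    rng = random.Random(538)  # fixed seed so the draws are repeatable
    # A 1-point miss in one state and an assumed 0.8 correlation elsewhere.
    print(expected_bias_elsewhere(1.0, 0.8))
    # One simulated pair for two similar states (assumed correlation 0.9).
    print(simulate_pair(0.9, 4.0, rng))
```

Drawing many pairs and repeating the exercise across all states is, in spirit, how a simulation can let a polling miss in one state spill over into others.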

Those are pretty wide uncertainty intervals; from what I can tell, they're about 25 percent bigger than those in some of the other election forecast models out there. One reason they are so large is that 538's model more closely follows trends in the reliability of state-level polls. It's really this simple: Polls have been worse recently, so we simulate more potential bias across states. And though our model could take a longer-range view and decrease the amount of bias we simulate, such a model would have performed much worse than our current version in 2016 and 2020. Even if polls end up being accurate this year, we'd rather have accounted for a scenario in which polling error is almost 50 percent larger than it was in 2020, just as it was in 2020 compared with 2016.

But the second source of uncertainty about the election — and the bigger one — is how much polls will move between now and Election Day. By forecasting future movement in the polls, we effectively "smooth out" bumps in our polling averages when translating them to Election Day predictions. Thinking hypothetically for a moment: If a candidate gains 1 point in the polls, but we expect the polls to move by an average of 20 points from now to November, the candidate's increased probability of victory will be a lot lower than if we anticipated just 10 points of poll movement.
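The hypothetical above can be worked through with a toy calculation. This sketch is not 538's model: it assumes the Election Day margin is normally distributed around today's polling margin, and it converts an average absolute movement into a standard deviation using the normal-distribution identity E|X| = sd * sqrt(2/pi). All function names are made up for illustration.

```python
import math


def sd_from_mean_abs(mean_abs):
    """Standard deviation of a zero-mean normal with the given mean absolute value."""
    return mean_abs * math.sqrt(math.pi / 2.0)


def win_probability(margin, mean_abs_movement):
    """Chance the Election Day margin is positive, given today's margin.

    Assumes the final margin is normal, centered on today's margin, with
    spread implied by the expected average absolute poll movement.
    """
    sd = sd_from_mean_abs(mean_abs_movement)
    return 0.5 * (1.0 + math.erf(margin / (sd * math.sqrt(2.0))))


def gain_from_one_point(mean_abs_movement, start_margin=0.0):
    """Increase in win probability from gaining 1 point in the polls."""
    before = win_probability(start_margin, mean_abs_movement)
    after = win_probability(start_margin + 1.0, mean_abs_movement)
    return after - before


if __name__ == "__main__":
    for movement in (20.0, 10.0):
        print(movement, round(gain_from_one_point(movement), 4))
```

Under these assumptions, a 1-point gain from a tied race is worth about 1.6 percentage points of win probability with 20 points of expected movement, and about 3.2 with 10 points: halving the expected movement roughly doubles how much each point in the polls matters.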

Today, we simulate an average of about 8 points of movement in the margin between the two candidates in the average state over the remainder of the campaign. We get that number by calculating 538's state polling averages for every day in all elections from 1948 to 2020, finding the absolute difference between the polling average on a given day and the average on Election Day, and taking the average of those differences for every day of the campaign. We find that polls move by an average of about 12 points from 300 days until Election Day to Election Day itself. This works out to the polls changing, on average, by about 0.35 points per day.
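The averaging procedure described above can be sketched in a few lines. This is one reading of that procedure, not 538's code: it assumes each race is a list of daily polling-average margins ordered from the earliest day to Election Day, and it averages the absolute gaps across races for each number of days remaining. The data structure and the tiny example values are hypothetical.

```python
from collections import defaultdict


def mean_abs_movement_by_days_out(series_by_race):
    """Average absolute gap between each day's polling average and the final one.

    series_by_race maps a race label to a list of daily margins, ordered from
    earliest day to Election Day (the last element). Returns a dict mapping
    days-until-Election-Day to the mean absolute difference across races.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for series in series_by_race.values():
        final = series[-1]
        last_index = len(series) - 1
        for index, value in enumerate(series):
            days_out = last_index - index
            totals[days_out] += abs(value - final)
            counts[days_out] += 1
    return {days: totals[days] / counts[days] for days in sorted(totals)}


if __name__ == "__main__":
    # Hypothetical three-day series for two made-up races.
    toy = {
        "race_a": [4.0, 2.0, 1.0],
        "race_b": [-3.0, -1.0, -2.0],
    }
    print(mean_abs_movement_by_days_out(toy))
```

With real data, the value at 300 days out would correspond to the roughly 12-point figure cited above.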

True, polls are less volatile than they used to be; from 2000 to 2020, there was, on average, just 8 points of movement in the polls in the average competitive state from 300 days out to Election Day. But there are a few good reasons to use the bigger historical dataset rather than subset the analysis to recent elections.

First, it's the most robust estimate; in election forecasting, we are working with a very small sample and need as many observations as possible. By taking a longer view, we also better account for potential realignments in the electorate, several of which look plausible this year. Given the events of the campaign so far, leaving room for volatility may be the safer course of action.

On the flip side, simulating less polling error over the course of the campaign gives you a forecast that assumes very little variation in the polls after Labor Day. That's because the statistical technique we use to explore error over time — called the "random walk process" — evenly spaces out changes in opinion over the entire campaign. But campaign events that shape voter preferences tend to pile up in the fall, toward the end of the campaign, and a lot of voters aren't paying attention until then anyway. Because of this, we prefer to use a dataset that prices in more volatility after Labor Day.
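To see why a smaller total implies so little late movement, consider a textbook random walk with independent, equally sized daily steps. Variance then accumulates linearly with time, so the typical movement still to come scales with the square root of the days remaining. The sketch below assumes Gaussian steps and is not 538's implementation; the function name is hypothetical, and the 64 days is roughly the span from Labor Day to Election Day in 2024.

```python
import math


def remaining_movement(total_mean_abs, total_days, days_remaining):
    """Expected average absolute poll movement over the days that remain.

    Assumes a random walk with equal-variance Gaussian daily steps, calibrated
    so that the movement over `total_days` equals `total_mean_abs`.
    """
    if not 0 <= days_remaining <= total_days:
        raise ValueError("days_remaining must be between 0 and total_days")
    return total_mean_abs * math.sqrt(days_remaining / total_days)


if __name__ == "__main__":
    labor_day_days_out = 64  # approximate, for 2024
    for total in (12.0, 8.0):  # the 1948-2020 and 2000-2020 figures above
        print(total, round(remaining_movement(total, 300, labor_day_days_out), 2))
```

Because the walk has no way to concentrate movement in the fall, calibrating it to the smaller 8-point total shrinks the post-Labor Day movement proportionally, which is the trade-off described above.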

As you can see, both the modeled error based on 1948-2020 elections and the modeled error based on 2000-2020 elections underestimate the actual polling shifts that took place in the final months of those elections. But the underestimation of variance for the 2000-2020 elections is particularly undesirable, since it pushes the simulated error below what was observed in even the most recent elections. So for our forecast model we choose to use the historical dataset that yields more uncertainty early, so that we get the right amount of uncertainty for recent elections later, even though we are still underestimating variance in some of the oldest elections in our training set. In essence, the final modeled error that we use ends up splitting the difference between historical and recent polling volatility.

Now it's time to combine all this uncertainty. The way our model works is by updating a prior prediction about the election with its inference from the polls. In this case, the model's starting position is a fundamentals-based forecast that predicts historical state election results using economic and political factors. Then, the polls essentially get stacked on top of that projection. The model's cumulative prediction is more heavily weighted toward whichever prediction we are more certain of: either the history-based fundamentals one or the prediction of what the polls will be on Election Day and whether they'll be accurate. Right now, we're not too sure about what the polls will show in November, and that decreases the weight our final forecast puts on polls today.
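The weighting logic can be illustrated with the standard normal-normal update, in which each prediction is weighted by its precision (one over its variance). This is a simplified stand-in for the idea, not a description of 538's actual model, and every number in the usage example other than the 2.1-point polling deficit is a hypothetical placeholder.

```python
def combine(prior_mean, prior_sd, poll_mean, poll_sd):
    """Precision-weighted blend of a fundamentals prior and a poll-based estimate.

    Returns (combined_mean, combined_sd, poll_weight), where poll_weight is the
    share of the combined mean that comes from the polls.
    """
    prior_precision = 1.0 / prior_sd ** 2
    poll_precision = 1.0 / poll_sd ** 2
    total_precision = prior_precision + poll_precision
    poll_weight = poll_precision / total_precision
    mean = (1.0 - poll_weight) * prior_mean + poll_weight * poll_mean
    sd = (1.0 / total_precision) ** 0.5
    return mean, sd, poll_weight


if __name__ == "__main__":
    fundamentals_margin = 1.0  # hypothetical prior margin, in points
    fundamentals_sd = 6.0      # hypothetical prior uncertainty
    poll_margin = -2.1         # Biden's current national polling deficit
    for poll_sd in (12.0, 6.0, 3.0):  # hypothetical poll uncertainty levels
        print(poll_sd, combine(fundamentals_margin, fundamentals_sd, poll_margin, poll_sd))
```

As the uncertainty attached to the polls shrinks, the weight on them rises and the combined margin moves toward the current polling average, which is what happens naturally as Election Day approaches.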

The reason, in other words, that our forecast hasn't moved much since the debate is that a 2-point swing in the race now does not necessarily translate to a 2-point swing on Election Day. The only way to take the polls more seriously is simply to wait.

For fun, though, let's look at what our forecast would say with different model settings. I ran our model four times: once as is (with about 8 points of remaining poll movement), once with a medium amount of poll movement (about 6 points between now and November), once with rather little movement (4 points) and finally once with both little poll movement and no historical fundamentals, making it purely a polls-only model.

The results of these different model settings show us that having less uncertainty about how the campaign could unfold increases Trump's odds of winning. That's because he's leading in polls today — so making our prediction of the polls on Election Day more closely match their current state pulls Biden's vote margin down, away from the fundamentals and closer to what would happen if the election were held today. And when we remove the fundamentals from the model completely, we get an even higher probability of a Trump victory.

And this is the root of why 538's forecast has been more stable than others: Most other forecasts simply take current polls more seriously. That is not necessarily the wrong prediction about the future; we just found that less error did not backtest well historically. Many different statistical models can be somewhat useful at explaining uncertainty in the election, and with few historical cases, it's impossible to say that one of us is right or the other is wrong.