Normalization of vaccine misinformation on social media amid COVID 'a huge problem'

"They talk about things like music and sport ... you are part of a community."

Social media posts containing vaccine misinformation have not only increased since the pandemic began but are also more likely to appear alongside less extreme content, effectively normalizing them and possibly delaying wider acceptance of a COVID-19 vaccine, experts told ABC News.

Analysts with Graphika, a firm that tracks social media misinformation, said that members of the anti-vaccine community, some of whom already had large followings, have since absorbed individuals previously entrenched in similar groups tied to wellness, alternative medicine or unfounded conspiracy theories.

Melanie Smith, head of analysis at Graphika, said anti-vaccination communities now engage online more with posts about pop culture, celebrities and politics, producing tailored messages that can amplify their cause. Posting a wider array of content is a strategy used by other fringe online groups, including some tied to white supremacy or extremist views, Smith added.

"It's indicative of any way that a community has been successful online, which is to focus on one specific belief or one specific narrative and then slowly expand it outwards," she said. "So [vaccines] won't be the only thing they talk about -- they talk about things like music and sport, so the idea is that you are part of a community that is larger than this one thing that brought you into it, which can be really strong for the drive to belong to something."

Despite hundreds of years of experience supporting the safety and effectiveness of vaccines, they remain the subject of many swirling conspiracy theories. Before the pandemic, most anti-vaccine sentiment on social media focused on individual anecdotes of alleged harm tied to vaccinations, and most posts and reactions were confined to private groups with much smaller audiences.

"I think there is less emphasis on the potential for negative side effects and more emphasis on the agenda and the companies and individuals at play," said Smith, adding that she's seen a strong focus on Bill Gates and George Soros, billionaires about whom wild conspiracy theories have raged online for years.

Spreading vaccine misinformation also can lead to financial rewards, Smith noted, as users can monetize posts by asking for donations or using fundraising platforms.

Facebook, Twitter and YouTube have taken steps to tackle anti-vaccination misinformation during the pandemic, such as partnering with more fact-checkers and pledging to remove certain content. Researchers said they welcomed these moves but that the crackdowns have simply pushed these groups toward alternative video platforms with little to no moderation.

An ABC News analysis of private Facebook groups, some with more than 200,000 members, showed dozens of links, photos and videos daily casting doubt on the safety and efficacy of vaccines for the novel coronavirus.

Anti-vaccination propagandists are also preying on the isolation, anxiety and fear that many people feel because of the pandemic, said Claire Wardle, executive director of First Draft, a nonprofit that combats disinformation.

"When people are under lockdown and feeling powerless, somebody tells you a narrative and you're like, 'Wow, there is something strange going on,'" she told ABC News. Because so few people truly understand how vaccines work, those casting doubt on them are "kind of this taking advantage of the absence of knowledge, and an understanding of [how] vaccines work, by taking a kernel of truth and twisting it."

The complex regulatory framework surrounding vaccines also gives misinformation openings to spread, according to Ana Santos Rutschman, an assistant professor of law at Saint Louis University, who said the number of people following anti-vaccine groups on Facebook is "exponentially higher now" than in March.

"The sheer complexity of this field, technically and from a regulatory perspective, is a large portion of the problem," she told ABC News Live, adding that if she didn't teach on the subject she'd be "utterly confused as to what an 'authorized use' of an approved thing means."

Information initially shared via Facebook also may make its way to even more dubious corners of the internet, Rutschman added.

"Even within Facebook," she said, "the information is just under some sort of wall, and that happens to be a private group, which will then redirect you somewhere else, to a mailing list, to a private website. At that point, it's almost impossible to intervene because we can't really do anything legally that affects speech in those areas. So it's very complicated to trace it."

While Facebook has said it has taken significant action to combat dangerous misinformation in private groups, Naomi Gleit, the company's vice president of product and social impact, told ABC News in an interview that "we have a lot of work to do on groups."

Besides adding fact-checkers, Facebook pledged to remove misinformation specifically about COVID-19 vaccines, working with health authorities to identify harmful content.

"If you post content that violates our community standards, we will take it down. That's true if you post it anywhere, including in a private group," Gleit said. "In groups where they repeatedly post content that violates our community standards, we will take the entire group down."

YouTube also has been plagued by anti-vaccine content, experts told ABC News.

According to Wardle, YouTube is a "real problem," and disinformation there can be harder to detect because a video might consist of three or four hours of someone talking, and automated systems might not pick up the harmful information within it.

"In many communities, those broadcasts are very popular," Wardle added. "So how does YouTube or researchers or journalists understand what's going on on a platform that's so difficult to research or to make sense of?"

A crackdown on vaccine misinformation on YouTube, Graphika's Smith noted, has simply pushed popular anti-vaccine proponents toward BitChute, a video-hosting site that allows users to monetize their content. BitChute did not respond to a request for comment from ABC News.

YouTube, which was purchased by Google in 2006, vowed to delete any COVID-19-related content that "poses a serious risk of egregious harm," including spurious claims about vaccines.

But the videos persist, from accounts with thousands of subscribers.

After being contacted by ABC News, YouTube removed a video in which an interviewee claimed that the pandemic was effectively over. The video had been on the site for weeks, amassing hundreds of thousands of views. Similar videos remain.

A spokesman for YouTube said its COVID-19 misinformation policy applies to all content, including comments on videos, and that the company has removed "hundreds of thousands of videos containing COVID medical misinformation to date."

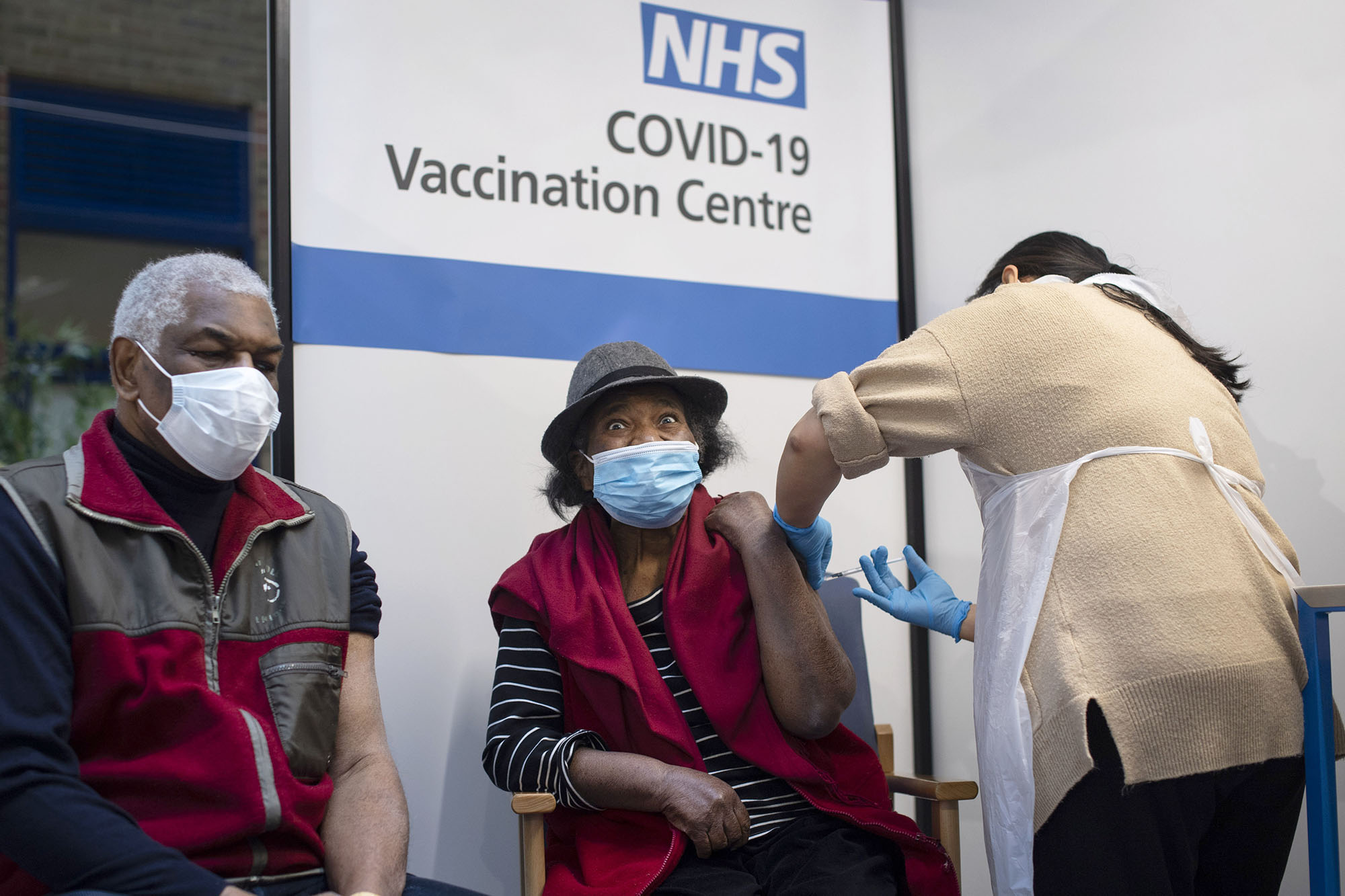

The issue of vaccine hesitancy grows more important by the day. On Tuesday, shortly after the first person in the U.K. received the vaccine, a doctor who has expressed skepticism about coronavirus vaccines appeared as a witness at a hearing of the Senate Homeland Security and Governmental Affairs Committee. Dr. Jane M. Orient used her time before the committee to promote unproven treatments and question the efficacy of COVID-19 vaccines.

Experts told ABC News they fear the problem will only worsen without direct intervention. Looking toward 2021, Wardle said society needs a broader conversation, and clear decisions, about what kind of online speech is permitted "because at the moment the platforms are making the decisions."

"We're starting to see the implications of that very kind of open position on speech that emerged 10 years ago from the platforms, and now we're sitting here thinking, 'Well, half the population might not take a vaccine because they've been reading information online that's false,'" she said. "That's a huge problem."

ABC News' Fergal Gallagher contributed to this report.