Buffalo shooter's livestream sparks criticism of tech platforms over content moderation

The attack spurred fears over the monitoring of livestreams and radicalization.

The 18-year-old suspect who allegedly gunned down 10 people in a mass shooting at a supermarket in Buffalo, New York, on Saturday had, authorities say, an assault-style rifle, body armor, a tactical helmet -- and a small camera.

The horror that followed became the latest mass shooting simultaneously broadcast online. Twitch, the Amazon-owned platform on which the video appeared, said it took down the broadcast after less than two minutes.

But that was enough time for individuals to download and repost copies of the video. One copy was viewed more than 3 million times after a link to it on Facebook garnered more than 500 comments and 46,000 shares before its removal, the Washington Post reported.

In addition, a 180-page document believed to have been published by the alleged shooter, which included a litany of bigoted views, said the writer had seen hateful messages on 4chan and other sites known for the appearance of white supremacist content, raising the possibility that he had been radicalized online. An additional 589-page document believed to be tied to the alleged shooter included postings he made on Discord, a social media platform.

The suspected shooter is now facing murder charges, to which he has entered a not guilty plea.

The episode drew renewed criticism of tech platforms and urgent calls for scrutiny over the moderation of videos and messages posted online, which can quickly spread to a wide audience and possibly fuel copycat attacks. The uproar arrives at a moment of public reckoning over content moderation, as Tesla CEO Elon Musk has used his $44 billion bid for Twitter to voice his skepticism of platforms taking a broad role in removing posts.

House Speaker Nancy Pelosi told ABC News' "This Week" on Sunday that social media companies must balance free speech with concerns over public safety. During the same show, New York Gov. Kathy Hochul criticized how hateful ideas spread on social media "like a virus" and called for accountability from the CEOs of social media companies.

Experts in online extremism told ABC News they hope the mass shooting on Saturday serves as a wake-up call to bolster the push for more rigorous moderation of online posts. But livestreams pose a particularly difficult task for those who police content on tech platforms, experts told ABC News, noting the challenge of monitoring and removing the posts in real time.

Further, online message boards that foment bigotry, such as 4chan, traffic in odious ideas that often stop short of violating the law, leaving the door open for platforms that take a more lax approach to monitoring content, Jared Holt, a resident fellow at the Atlantic Council's Digital Forensic Research Lab, told ABC News.

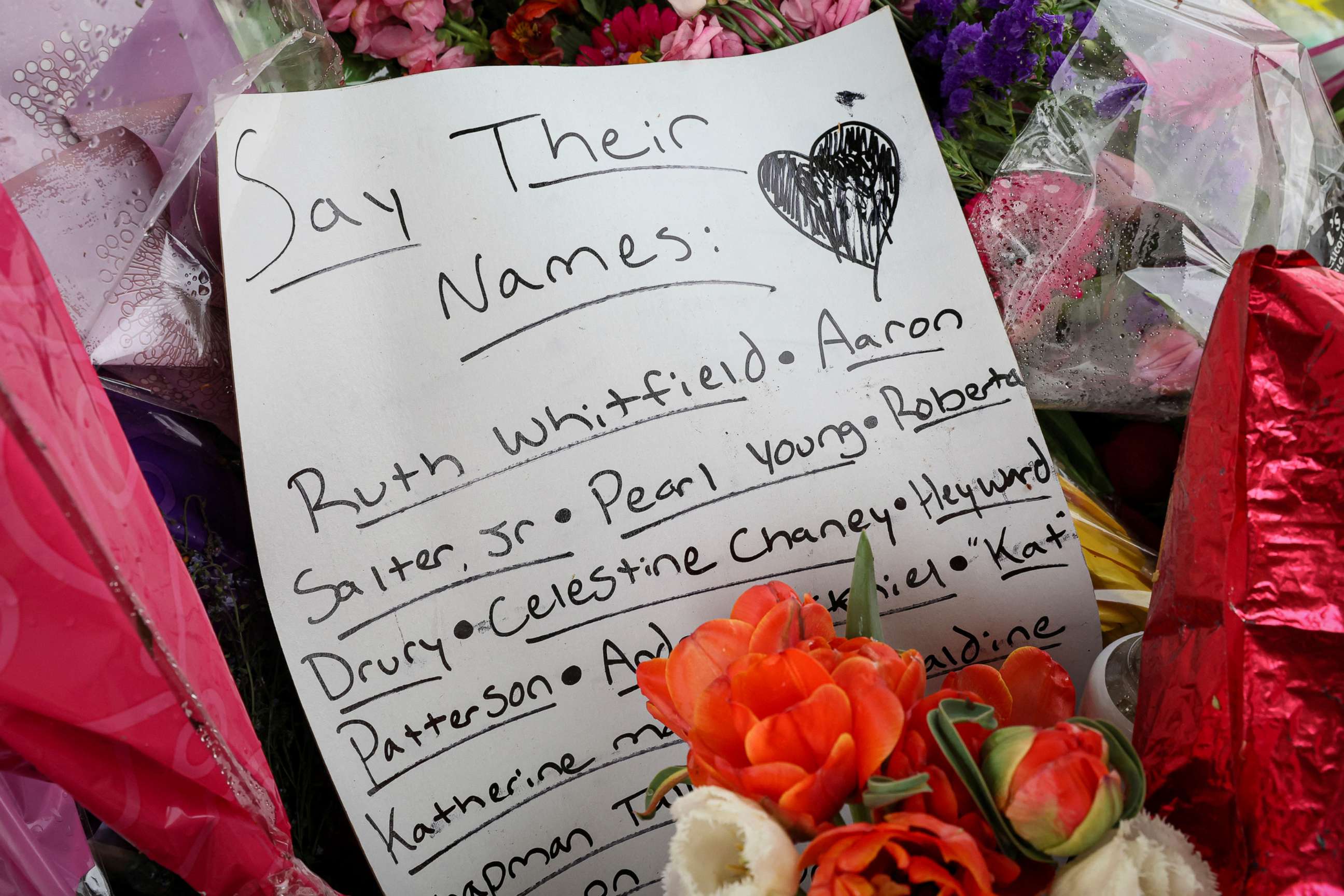

The mass shooting in Buffalo, which saw 10 people killed -- all of whom were Black -- and three others injured, comes three years after a self-identified white supremacist livestreamed a mass shooting at two mosques in Christchurch, New Zealand, which left 51 people dead. Live video of that massacre remained on Facebook for 17 minutes, far longer than the less than two minutes Twitch took to remove the video from Buffalo on Saturday.

"It's an improvement, but needless to say, obviously it's not a perfect answer," Holt told ABC News. "Moderating live content has proven to be a massive challenge to tech platforms."

In general, tech platforms police content through both automated systems and manual decisions made by individuals, Alice Marwick, a professor at the University of North Carolina at Chapel Hill who specializes in the study of social media, told ABC News. Livestreams pose such difficulty because they can evade the automated systems, forcing platforms to rely on human moderators who sometimes cannot handle the overwhelming volume of incoming content, she said.

"The size and scale of the number of livestreams that there are on a daily basis make it impossible to moderate them completely," she said.

More than 8 million users broadcast live on Twitch each month, and the site features an average of more than 2.5 million hours of video every day, Twitch Global Head of Trust and Safety Angela Hession told ABC News in a statement.

"We've invested heavily in our sitewide safety operations and in the people and technologies who drive them, and will continue to do so," she said.

Platforms could further limit livestream incidents like what happened in Buffalo by implementing a time-delay for live footage, like television stations do, Marwick and Holt said. The companies could also ensure that users must be verified before gaining the ability to livestream, as YouTube does.

But livestreaming will not be removed from the platforms altogether, Holt said, citing companies like Twitch that depend on livestreaming for their business. "The cat is out of the proverbial bag," he said.

Even a brief livestream can end up reaching a large audience. As noted, in the case of the video of the shooting in Buffalo, a copy of the livestreamed video received millions of views after a link on Facebook helped drive traffic to it, the Washington Post reported.

A spokesperson for Meta, the parent company of Facebook, told ABC News that the company on Saturday quickly designated the event as a "violating terrorist attack," which prompted an internal process to identify and remove the account of the identified suspect, as well as copies of his alleged document and any copy of or link to video of his alleged attack.

The move ensures that any copies of or links to the video, writing or other content that praises, supports or represents the suspect will be removed, the spokesperson added.

In a statement, Twitch told ABC News: "We are devastated to hear about the shooting that took place in Buffalo, New York. Our hearts go out to the community impacted by this tragedy. Twitch has a zero-tolerance policy against violence of any kind and works swiftly to respond to all incidents."

"The user has been indefinitely suspended from our service, and we are taking all appropriate action, including monitoring for any accounts rebroadcasting this content," the statement added.

Content monitors also face a challenge from message boards and other sites that feature white supremacist ideology and can radicalize users. Since such content is offensive and dangerous but oftentimes legal, the onus falls on platforms to take an aggressive approach to remove it, Holt told ABC News. Not all platforms bring the same level of rigor to the task, he added.

The anonymous imageboard website 4chan is known for the appearance of hateful content. The alleged shooter in Buffalo named 4chan as a site he had visited. The website has not responded to ABC News' request for comment.

Hateful content can migrate from alternative platforms to more mainstream ones, allowing such messages to reach a wider audience before they are addressed, Holt said.

"There may be awful things on seedier internet platforms like 4chan," he said. "The internet doesn't exist as perfectly siloed platforms."

The alleged shooter also posted messages online in a private group on Discord, a social media platform. It's unclear who had access to the group. According to ABC News consultant and former Department of Homeland Security official John Cohen, Discord is popular mostly with high school-aged teenagers and has been used to spread conspiracy theories.

"We extend our deepest sympathies to the victims and their families. Hate and violence have no place on Discord. We are doing everything we can to assist law enforcement in the investigation," a spokesperson for Discord told ABC News in a statement.

Online radicalization takes place over a prolonged period, affording multiple opportunities for platforms to step in, said Marwick, the professor at the University of North Carolina at Chapel Hill.

"When people do get radicalized online, it's not something that happens in an instant," she said. "Sometimes people like to think about this as a flash of lightning -- that's not how this works"

"It takes place over a period of time," she adds. "There are possible points of intervention before it gets to this point."